Speech Recognition Performance under Noisy Conditions of Children with Hearing Loss

Abstract

Objectives

To understand the communication abilities and problems of hearing-impaired children in noisy situations, this study examined speech recognition at different background noise levels and compared how contextual cues affect speech recognition in noise.

Methods

Thirty-four children with severe/profound hearing impairment were enrolled: 15 used cochlear implants (CIs) and 19 used hearing aids (HAs). The Mandarin Speech Perception in Noise (SPIN) test was administered at two signal-to-noise ratios (SNRs), 10 dB and 0 dB (the higher and lower SNR, respectively). Scores for high-predictability (HP) and low-predictability (LP) sentences were recorded separately to test the effect of contextual cues on speech recognition.

Results

Children with CIs performed significantly better than children with HAs under both noise conditions (CIs, SNR 10: mean, 49.44, standard deviation [SD], 13.90; SNR 0: mean, 31.95, SD, 15.72; HAs, SNR 10: mean, 33.33, SD, 9.72; SNR 0: mean, 19.52, SD, 6.67; P<0.05), but there was no significant interaction between device and background noise level. Hearing-impaired children performed significantly better at SNR 10 dB (mean, 40.44; SD, 14.12) than at SNR 0 dB (mean, 25.0; SD, 12.98; P<0.001). Performance was significantly better for HP sentences (mean, 38.6; SD, 12.66) than for LP sentences (mean, 25.25; SD, 12.93; P<0.001). A significant interaction was found between background noise level and contextual cues (F=8.47, P<0.01).

Conclusion

The study shows that SNR significantly influences speech recognition performance in children with severe/profound hearing impairment. Children recognize speech better at the higher SNR and when sentences provide contextual cues. Children with CIs perform better than children with HAs at both noise levels.

INTRODUCTION

Auditory devices such as hearing aids and cochlear implants have improved speech perception in quiet environments for people with hearing impairment (1). In real environments, however, background noise is almost always present; it can mask speech cues and thus impair speech recognition and learning (2, 3). To assess the communication abilities of hearing-impaired children in noisy situations and to understand their communication problems, we examined speech recognition at different background noise levels and sought to determine whether contextual cues affect speech recognition in noise.

MATERIALS AND METHODS

Participants

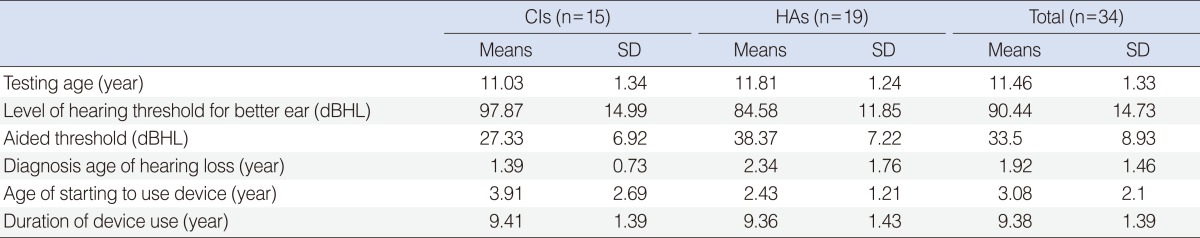

Thirty-four children with severe/profound hearing impairment were enrolled: 15 with cochlear implants (CIs) and 19 with hearing aids (HAs). All participants met the following criteria: 1) age over 10 years; 2) oral language as the only mode of communication; 3) an unaided pure-tone threshold in the better ear of >70 dB HL; 4) use of an auditory device for at least 1 year; and 5) the ability to complete open-set speech perception testing. Table 1 shows the demographic data of the participants. Apart from the better-ear hearing thresholds and the aided thresholds, no significant differences were found between the CI and HA groups.
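The five inclusion criteria can be expressed as a single screening check. The sketch below is illustrative only: the `Candidate` record and its field names are hypothetical, and it assumes each criterion is recorded as a simple number or yes/no value.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    # Hypothetical record; the study does not describe how data were stored.
    age_years: float
    oral_only: bool                  # oral language is the only communication mode
    better_ear_unaided_db_hl: float  # unaided pure-tone threshold, better ear
    device_use_years: float          # years of CI or HA use
    open_set_testable: bool          # can complete open-set speech perception tests


def is_eligible(c: Candidate) -> bool:
    """True only if the candidate meets all five inclusion criteria."""
    return (
        c.age_years > 10
        and c.oral_only
        and c.better_ear_unaided_db_hl > 70
        and c.device_use_years >= 1
        and c.open_set_testable
    )
```

Note the strict inequalities for age and threshold ("over 10 years", ">70 dB HL") versus the inclusive bound for device use ("at least 1 year").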

Testing materials & procedures

The Mandarin version of the Speech Perception in Noise (SPIN) test consists of sentences presented in babble noise. The listener's task was to identify the final word of each sentence (the keyword), which was always monosyllabic. Test items were presented at two SNRs, 10 dB and 0 dB. Speech and babble noise levels were 60 and 50 dB HL, respectively, at SNR 10 dB, and both 50 dB HL at SNR 0 dB; they were delivered via a loudspeaker placed 1 m from the subject at 45° azimuth. Children wrote down their responses, which were scored as the percentage of keywords correctly identified. Two types of sentences were used: high-predictability (HP) items, in which the keyword was somewhat predictable from context, and low-predictability (LP) items, in which the final word could not be predicted from context. HP and LP scores were recorded separately to test the effect of contextual cues on speech recognition.
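The level settings and scoring rule above reduce to simple arithmetic: the SNR is the speech level minus the noise level in dB, and each score is the percentage of keywords identified correctly. The following Python sketch illustrates both. The function names are hypothetical, and exact string matching of written responses is an assumption, since the rules for scoring written Mandarin responses (for example, homophones) are not described here.

```python
from typing import Sequence


def snr_db(speech_level_db: float, noise_level_db: float) -> float:
    """SNR in dB: speech level minus noise level, both on the same dB scale."""
    return speech_level_db - noise_level_db


def percent_correct(responses: Sequence[str], keywords: Sequence[str]) -> float:
    """Percentage of sentence-final keywords written down correctly.

    Assumes exact (whitespace-trimmed) matching; real scoring rules may differ.
    """
    if len(responses) != len(keywords):
        raise ValueError("one response is needed per keyword")
    if not keywords:
        raise ValueError("no keywords to score")
    hits = sum(r.strip() == k.strip() for r, k in zip(responses, keywords))
    return 100.0 * hits / len(keywords)
```

For example, `snr_db(60, 50)` gives 10 and `snr_db(50, 50)` gives 0, matching the two test conditions.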

Two-way ANOVA was used for the statistical analyses, with P<0.05 considered significant. Two analyses were performed. The first tested the effects of device and SNR condition (the independent variables), and their interaction, on speech recognition (the dependent variable). The second tested the effects of contextual cues and SNR condition, and their interaction, on speech recognition.
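For readers unfamiliar with the statistic, the following is a minimal textbook sketch of how a two-way ANOVA partitions variance into two main effects and their interaction. It is not the authors' analysis: it assumes a balanced design in which every cell is an independent group, whereas in this study the CI and HA groups differ in size (15 vs. 19) and each child was tested at both SNRs, which would call for unbalanced or repeated-measures methods. The function name and input format are hypothetical.

```python
from itertools import product
from statistics import mean


def two_way_anova(cells):
    """Balanced two-way between-subjects ANOVA (textbook sketch).

    cells maps (level_of_A, level_of_B) -> list of scores. Every combination
    of levels must be present with the same number n >= 2 of scores.
    Returns F ratios and degrees of freedom for A, B, and the A x B interaction.
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    p, q = len(a_levels), len(b_levels)
    n = len(next(iter(cells.values())))
    if p < 2 or q < 2:
        raise ValueError("each factor needs at least two levels")
    if n < 2 or any(len(cells.get(k, [])) != n for k in product(a_levels, b_levels)):
        raise ValueError("design must be balanced with n >= 2 scores per cell")

    cell_mean = {k: mean(cells[k]) for k in product(a_levels, b_levels)}
    grand = mean(cell_mean.values())  # equals the mean of all scores when balanced
    a_mean = {a: mean(cell_mean[(a, b)] for b in b_levels) for a in a_levels}
    b_mean = {b: mean(cell_mean[(a, b)] for a in a_levels) for b in b_levels}

    # Sums of squares for each source of variation.
    ss_a = n * q * sum((a_mean[a] - grand) ** 2 for a in a_levels)
    ss_b = n * p * sum((b_mean[b] - grand) ** 2 for b in b_levels)
    ss_ab = n * sum(
        (cell_mean[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
        for a, b in product(a_levels, b_levels)
    )
    ss_within = sum((x - cell_mean[k]) ** 2 for k in cell_mean for x in cells[k])

    df_a, df_b = p - 1, q - 1
    df_ab, df_within = df_a * df_b, p * q * (n - 1)
    ms_within = ss_within / df_within  # error term shared by all three F ratios
    return {
        "F_A": (ss_a / df_a) / ms_within,
        "F_B": (ss_b / df_b) / ms_within,
        "F_AB": (ss_ab / df_ab) / ms_within,
        "df_A": df_a, "df_B": df_b, "df_AB": df_ab, "df_within": df_within,
    }
```

P-values can then be obtained from the upper tail of the F distribution, for example with `scipy.stats.f.sf(F, df_effect, df_within)`.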

RESULTS

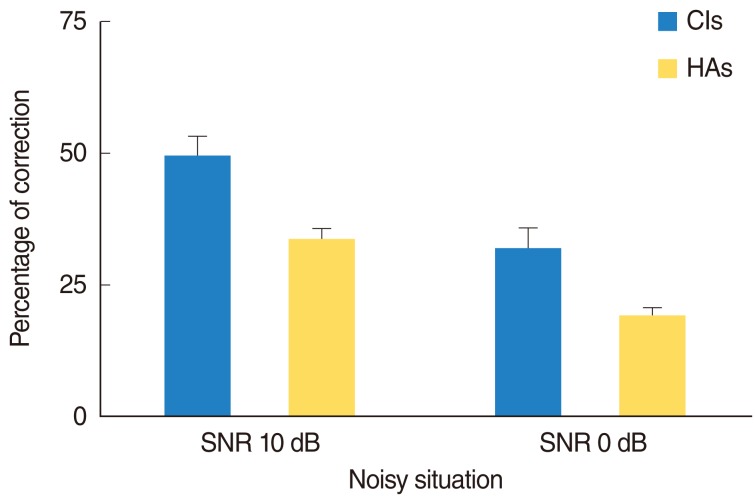

Two-way ANOVA (Table 2) showed significant main effects of both device and SNR condition on speech recognition. Hearing-impaired children performed significantly better at SNR 10 dB (mean, 40.44; standard deviation [SD], 14.12) than at SNR 0 dB (mean, 25.0; SD, 12.98; F=12.67; P<0.001). Children with CIs also performed significantly better than children with HAs under both conditions (CIs, SNR 10: mean, 49.44, SD, 13.90; SNR 0: mean, 31.95, SD, 15.72; HAs, SNR 10: mean, 33.33, SD, 9.72; SNR 0: mean, 19.52, SD, 6.67; F=14.57; P<0.05). However, the interaction between device and noise condition was not significant (F=1.56, P=0.22) (Fig. 1). Thus, children with CIs recognized speech better than children using HAs, all children performed better at SNR 10 dB than at SNR 0 dB, and the effect of SNR did not differ significantly between the two device groups.
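The pooled means reported above can be checked against the group means. Assuming each pooled mean is taken over all 34 children, it should equal the mean of the CI and HA group means weighted by group size (15 and 19 children):

```latex
\bar{x}_{\mathrm{SNR\,10}} = \frac{15(49.44) + 19(33.33)}{34} = \frac{1374.87}{34} \approx 40.44,
\qquad
\bar{x}_{\mathrm{SNR\,0}} = \frac{15(31.95) + 19(19.52)}{34} = \frac{850.13}{34} \approx 25.00
```

Both values agree with the reported pooled means of 40.44 and 25.0, so the pooled and group-level figures are consistent with each other.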

The Speech Perception in Noise (SPIN) performance of children with cochlear implants (CIs) and hearing aids (HAs) under the two conditions. SNR, signal-to-noise ratio.

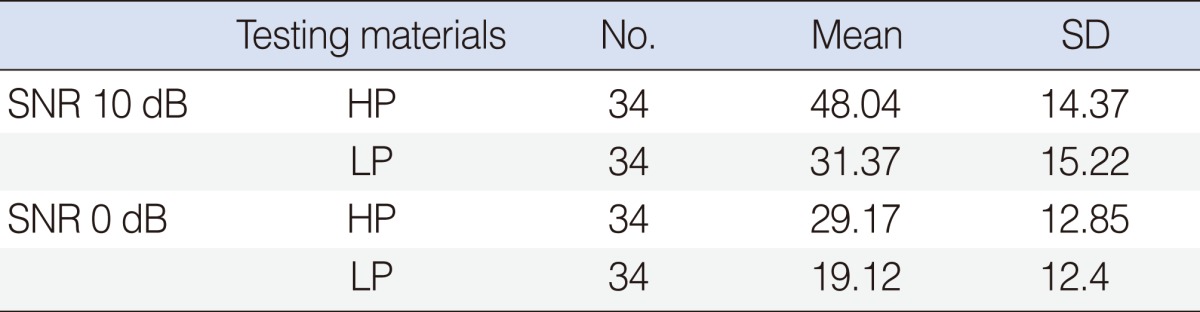

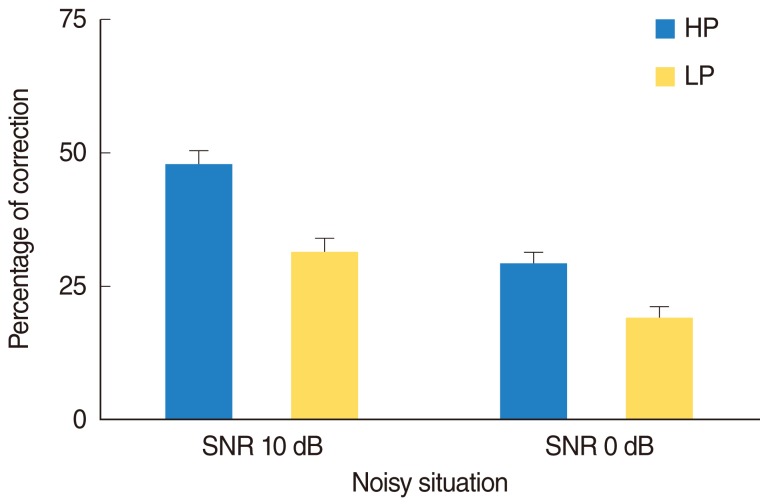

Two-way ANOVA (Table 3) showed significant main effects of contextual cues and SNR condition on speech recognition. Hearing-impaired children performed significantly better on HP sentences (mean, 38.6; SD, 12.66) than on LP sentences (mean, 25.25; SD, 12.93) (F=115.79, P<0.001). The interaction between noise level and contextual cues was also significant (F=8.47, P<0.01): the difference between HP and LP scores was larger at SNR 0 dB than at SNR 10 dB (Table 3, Fig. 2). In other words, the benefit of contextual cues depended on the SNR and was smaller at SNR 10 dB than at SNR 0 dB.
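In a 2 x 2 design, this interaction can be read as a difference of differences: the HP minus LP gap at SNR 0 dB, minus the HP minus LP gap at SNR 10 dB. A positive value means contextual cues helped more in the noisier condition, which is the pattern described above; the interaction F tests whether this contrast differs reliably from zero. The per-cell means are given in Table 3 and are not reproduced here, so the example uses placeholder numbers, not the study's data. The function name and key labels are hypothetical.

```python
def interaction_contrast(cell_means):
    """Difference-of-differences for a 2 x 2 (SNR x context) design.

    cell_means maps (snr, context) -> mean percent correct, where snr is
    "SNR0" or "SNR10" and context is "HP" or "LP" (hypothetical labels).
    Returns the HP-LP gap at SNR 0 dB minus the HP-LP gap at SNR 10 dB;
    a positive value means context helped more in the noisier condition.
    """
    gap_snr0 = cell_means[("SNR0", "HP")] - cell_means[("SNR0", "LP")]
    gap_snr10 = cell_means[("SNR10", "HP")] - cell_means[("SNR10", "LP")]
    return gap_snr0 - gap_snr10


# Placeholder means for illustration only (not the values in Table 3).
example = {
    ("SNR0", "HP"): 30.0, ("SNR0", "LP"): 15.0,
    ("SNR10", "HP"): 45.0, ("SNR10", "LP"): 38.0,
}
print(interaction_contrast(example))  # prints 8.0
```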

DISCUSSION

The present study shows that environmental noise significantly influences speech recognition performance in children with severe/profound hearing impairment. Children with CIs performed better than children with HAs at both SNR 10 dB and SNR 0 dB. At SNR 10 dB, speech recognition accuracy was about 50% (49.44%) for children with CIs and 33.33% for children with HAs. These results agree with those of previous studies (4, 5).

Spoken sentences that provide contextual cues enhance speech perception in children with severe/profound hearing impairment, especially in noisier (lower SNR) situations. Averaged across both SNR conditions, speech recognition accuracy was 38.6% for HP sentences and 25.25% for LP sentences, similar to the findings of Lee et al. (6) and Elliott (7). Furthermore, the interaction between noise level and contextual cues indicates that the performance of hearing-impaired children depended more on contextual cues in the noisier condition, suggesting that contextual cues substantially improve comprehension by hearing-impaired children in noisy situations.

The present study demonstrates that background noise masks speech information and may disrupt learning, and that high-predictability sentences are more easily understood in noisy environments. Based on these findings, we suggest the following: 1) the SNR of the listening environment should be increased; 2) teachers should keep their speech focused on the main topic and provide clear speechreading cues; and 3) an FM system should be used where necessary.

Notes

No potential conflict of interest relevant to this article was reported.